Characterizing Collective Efforts in Content Sharing and Quality Control for ADHD-relevant Content on Video-sharing Platforms

ASSETS '25: The 27th International ACM SIGACCESS Conference on Computers and Accessibility

Hanxiu ‘Hazel’ Zhu, Avanthika Senthil Kumar, Sihang Zhao, Ru Wang, Xin Tong, and Yuhang Zhao

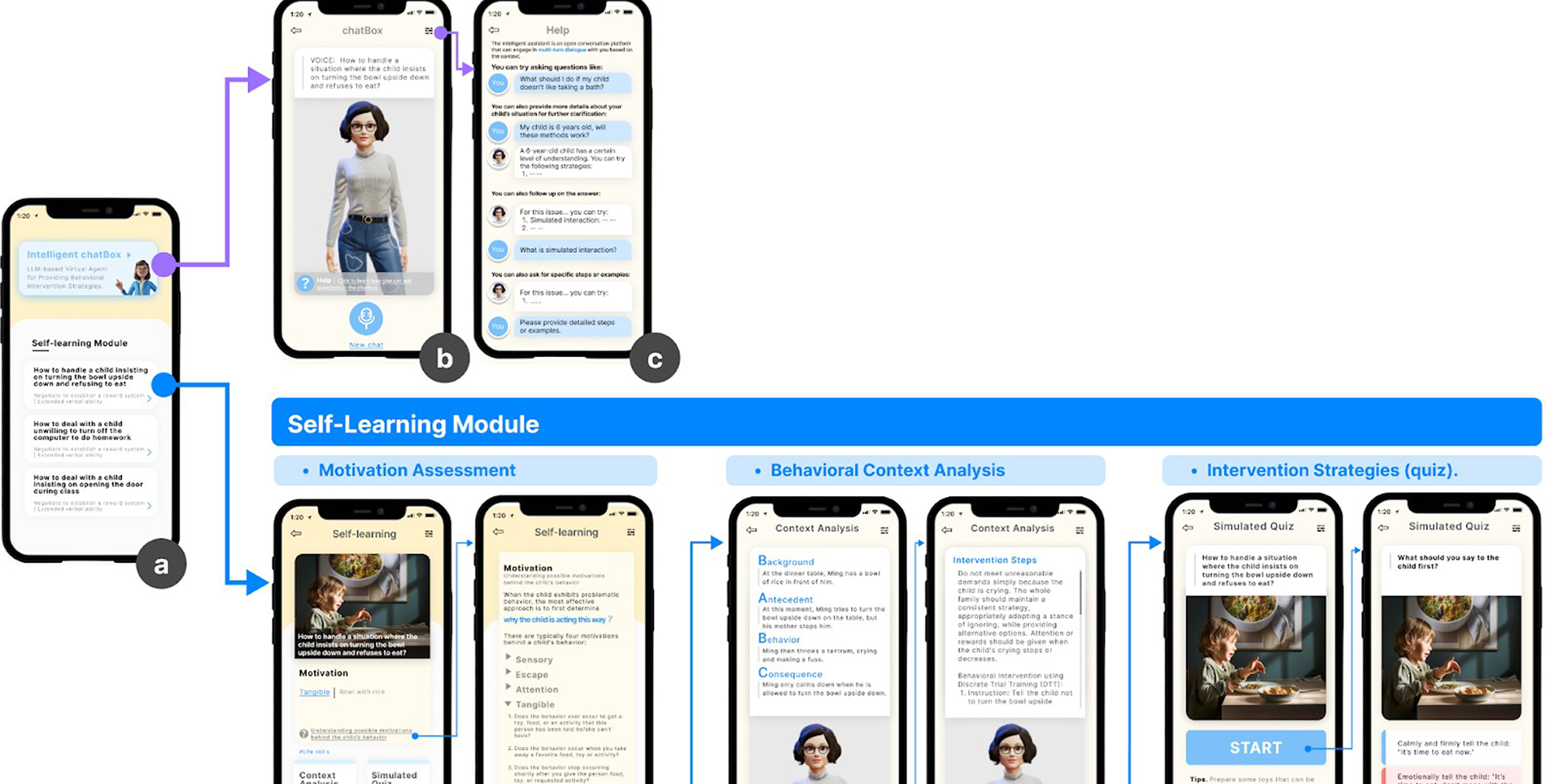

"I'm Not Confident in Debiasing AI Systems Since I Know Too Little": Hands-on Gender Bias Tutorials for AI Practitioners and Learners

ASIS&T '25: 88th Annual Meeting of the Association for Information Science & Technology

Kyrie Zhixuan Zhou, Jiaxun Cao, Xiaowen Yuan, Daniel E. Weissglass, Zachary Kilhoffer, Madelyn Rose Sanfilippo, Xin Tong

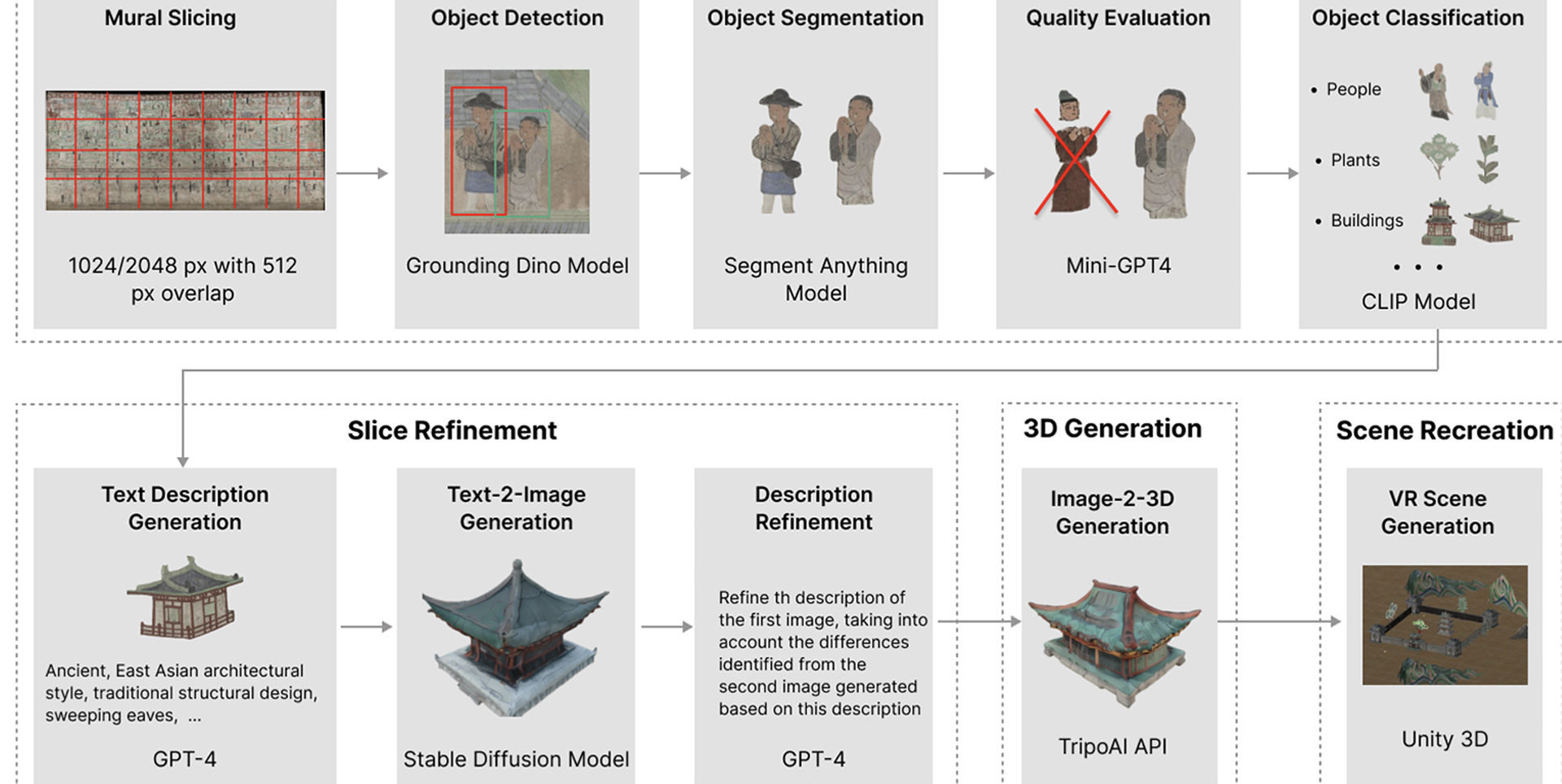

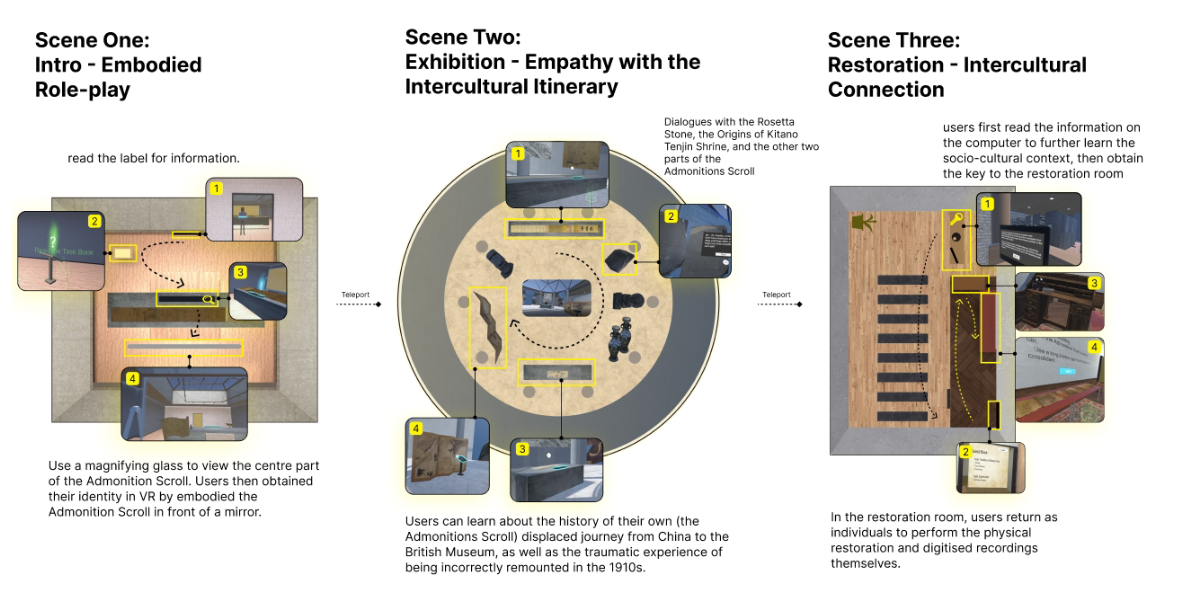

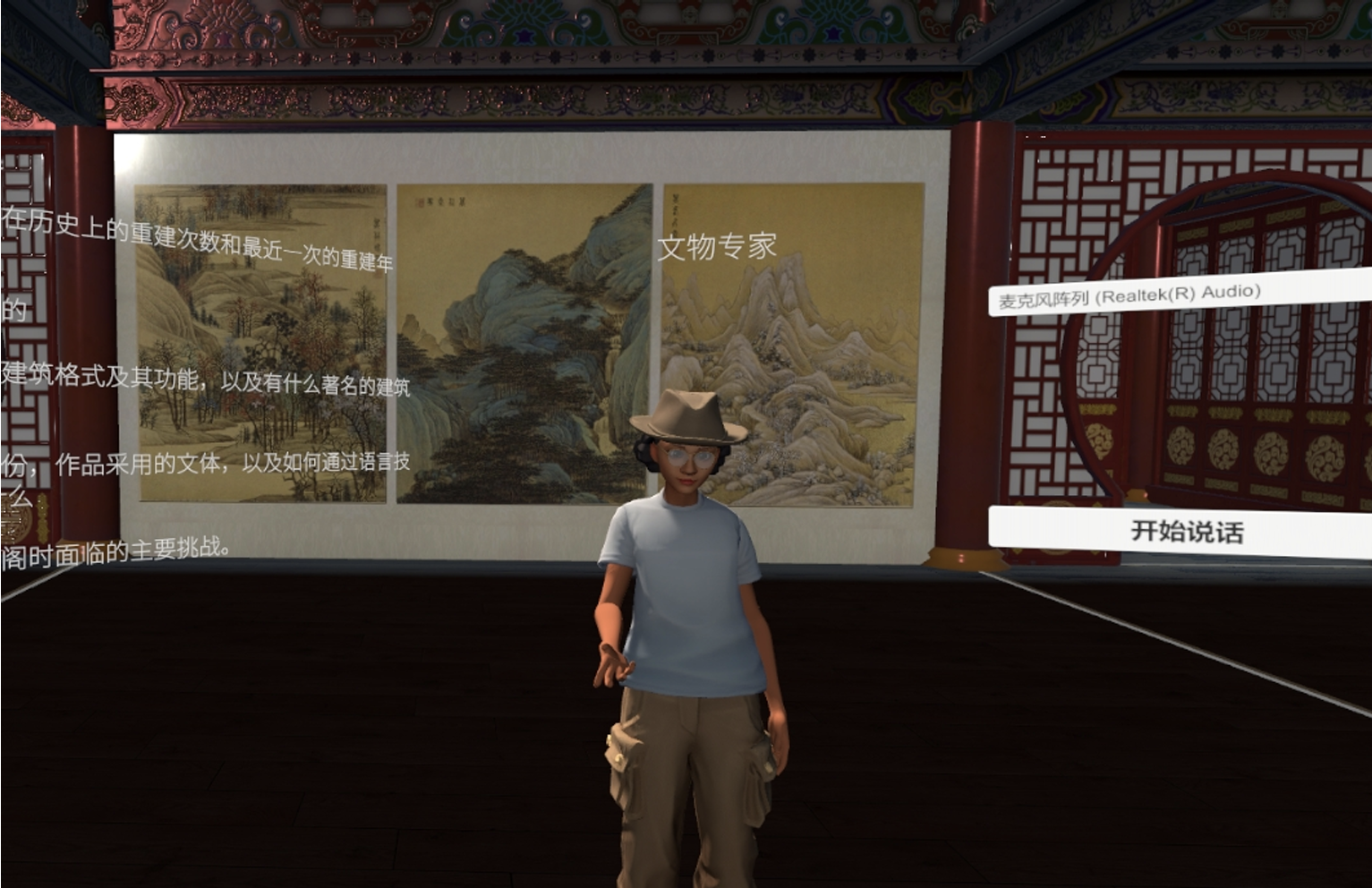

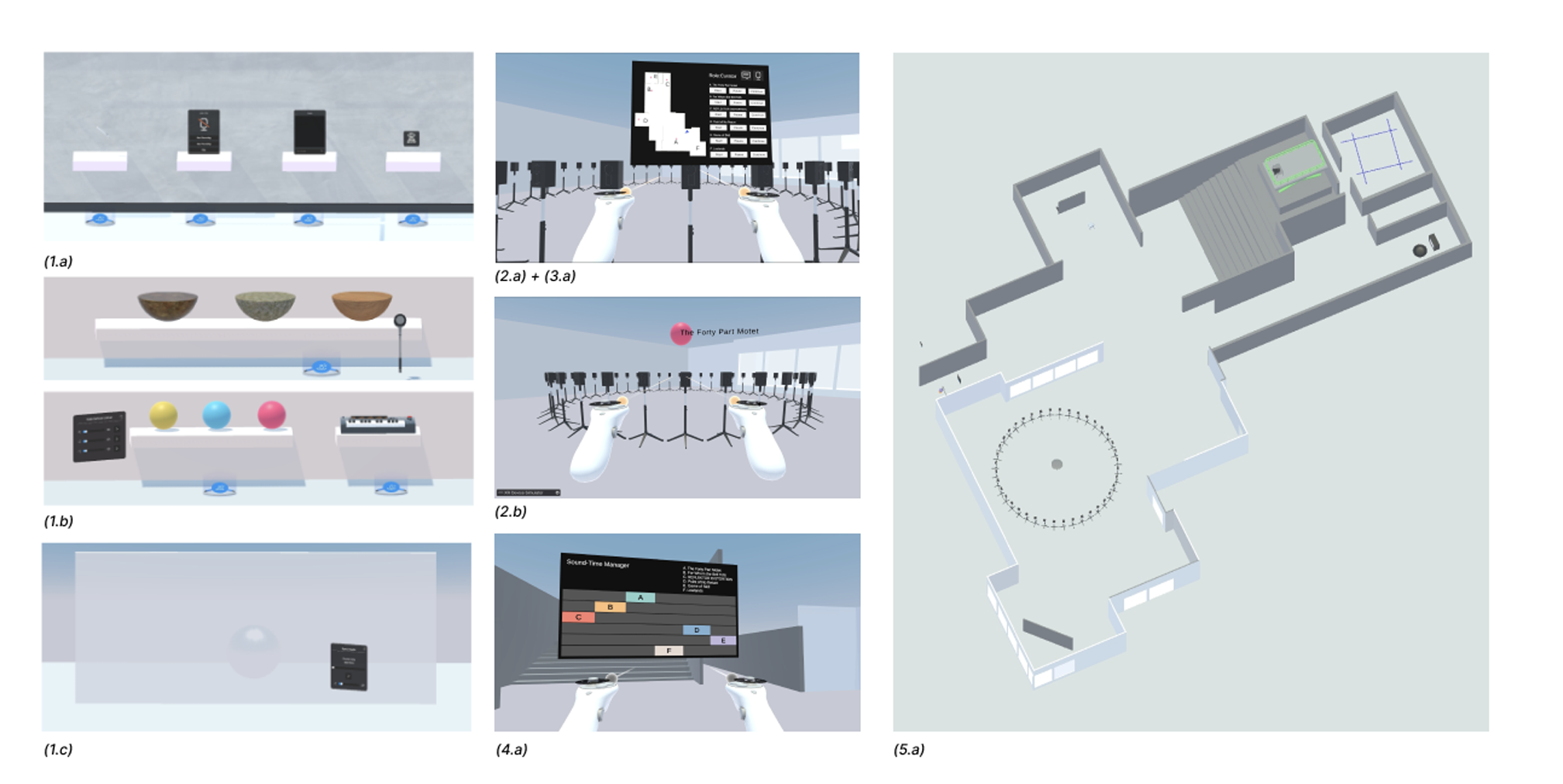

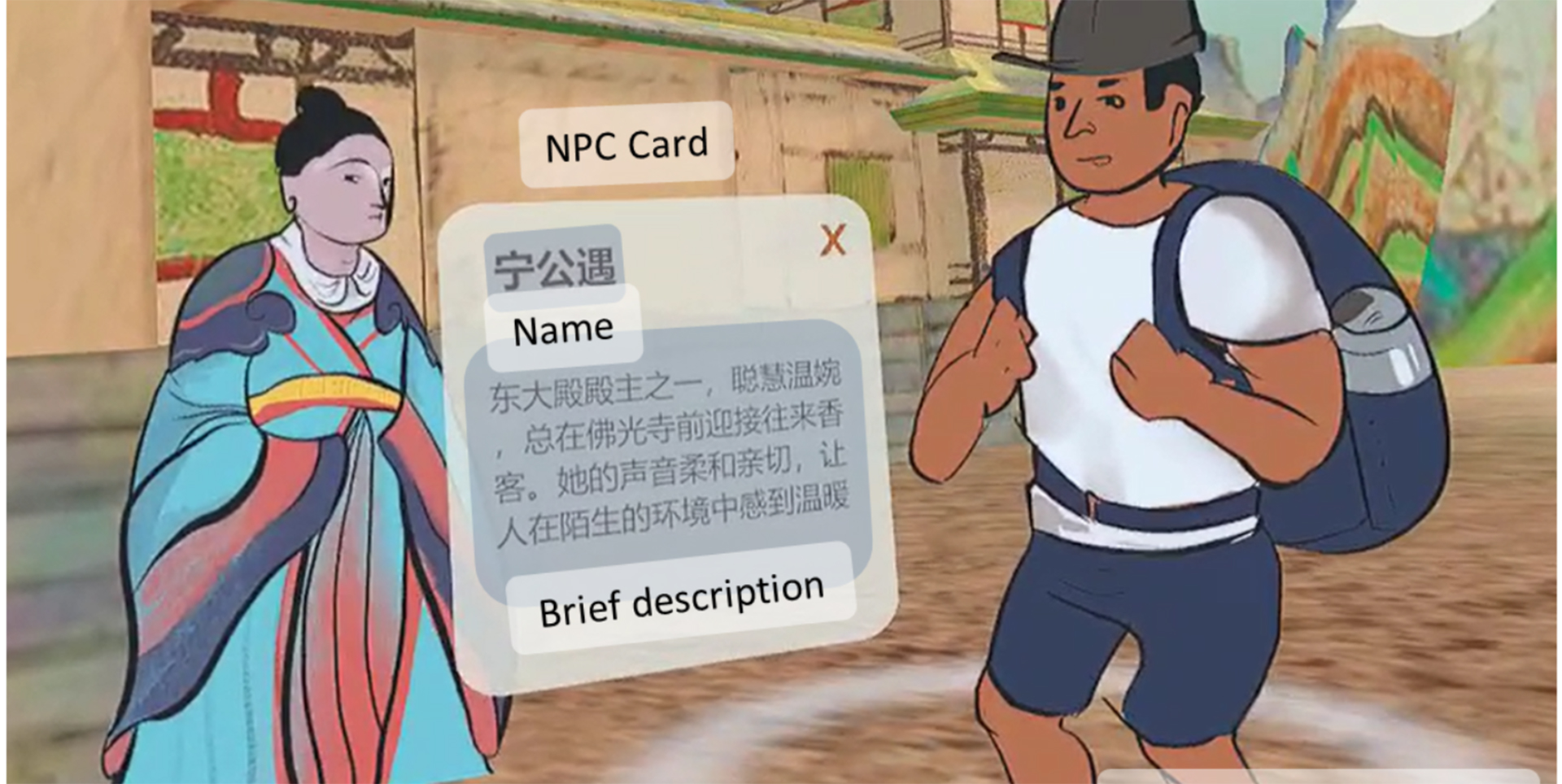

Immersive Biography: Supporting Intercultural Empathy and Understanding for Displaced Cultural Objects in Virtual Reality

CHI '25: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Ke Zhao, Ruiqi Chen, Xiaziyu Zhang, Chenxi Wang, Siling Chen, Xiaoguang Wang, Yujue Wang, and Xin Tong

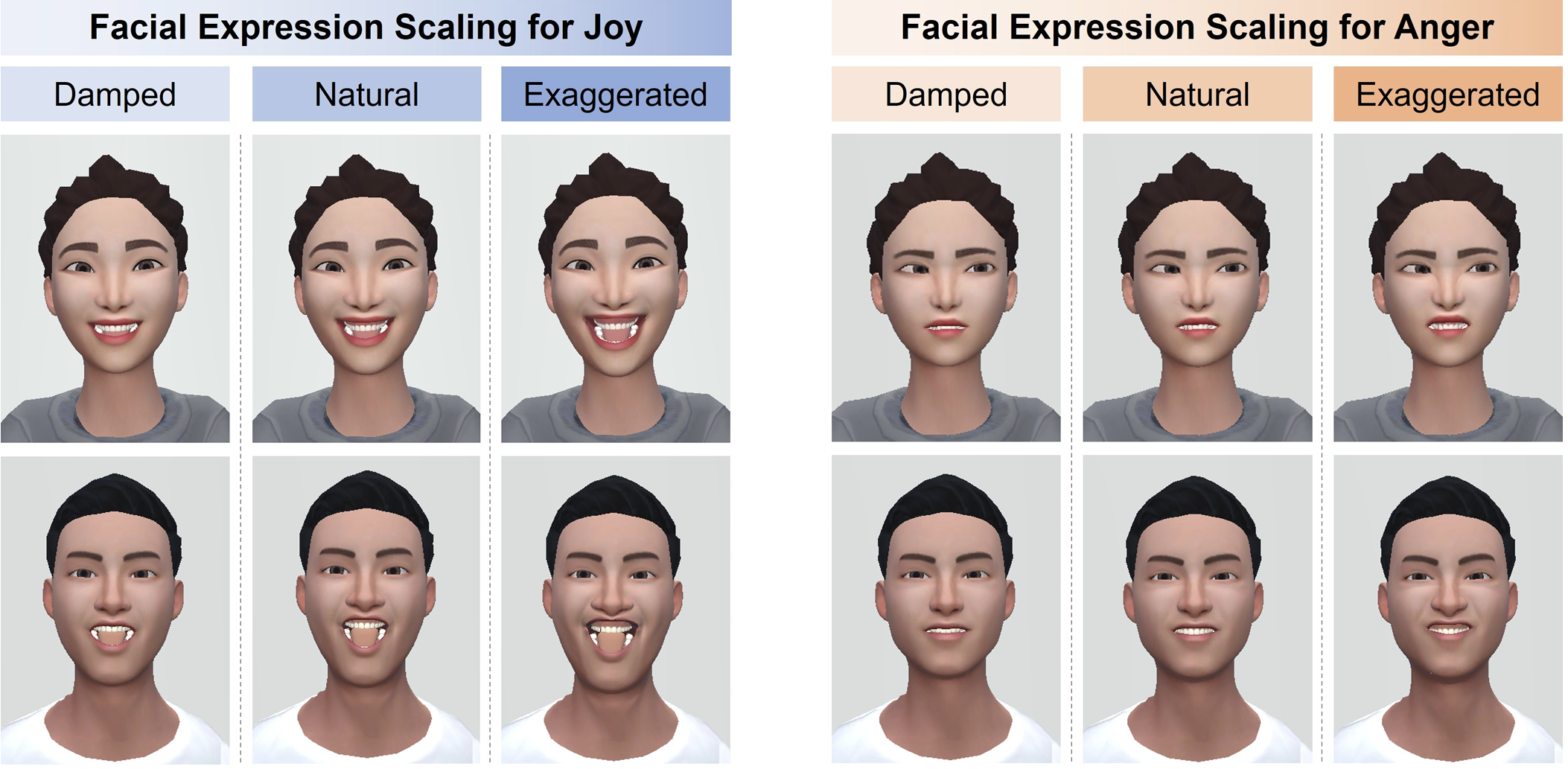

Raise Your Eyebrows Higher: Facilitating Emotional Communication in Social Virtual Reality Through Region-Specific Facial Expression Exaggeration

CHI '25: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Xueyang Wang, Sheng Zhao, Yihe Wang, Howard Ziyu Han, Xinge Liu, Xin Yi, Xin Tong, Hewu Li

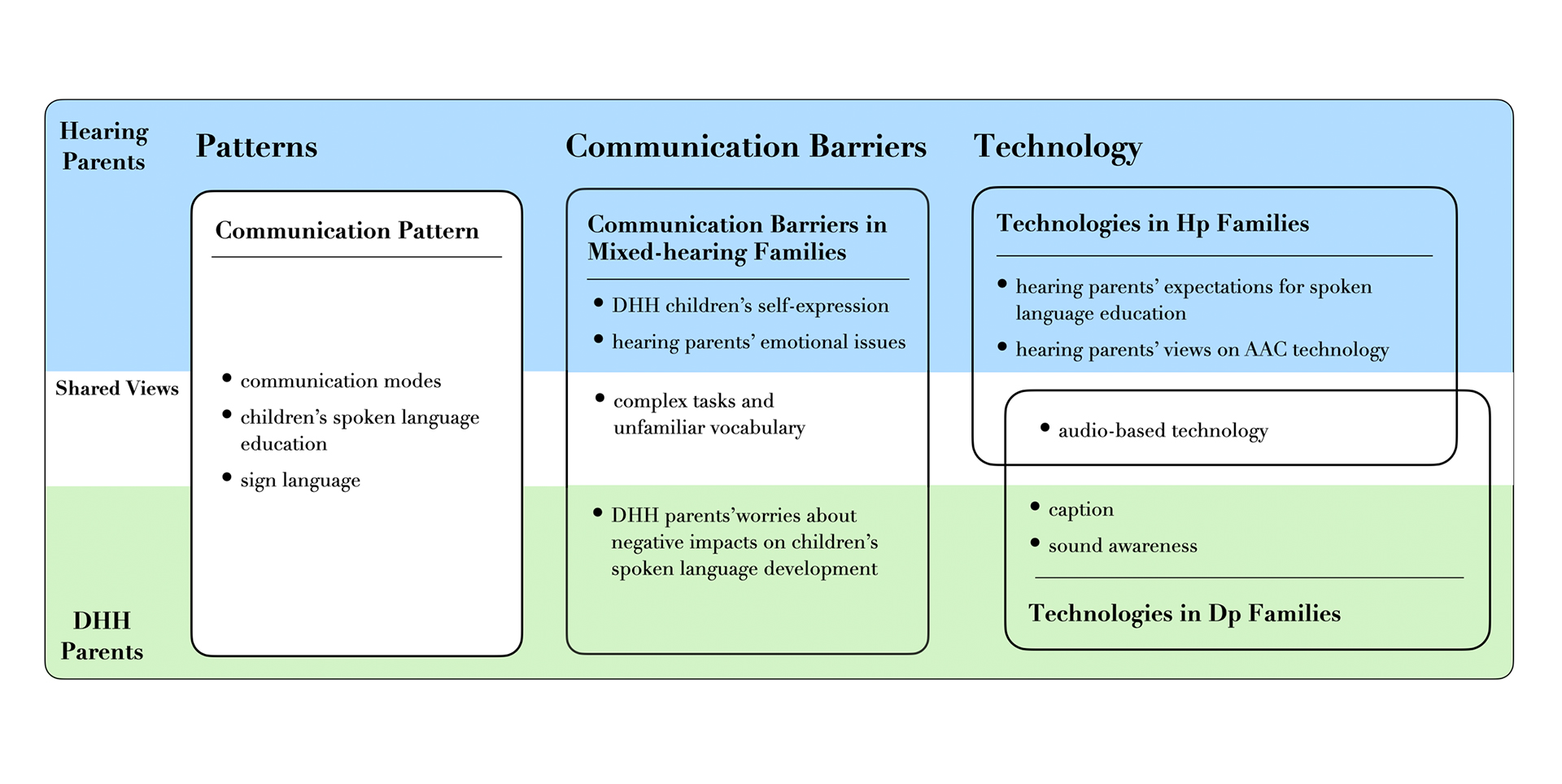

Parental Perceptions of Children's d/Deaf Identity Shaping Technology Use: A Qualitative Study on Communication Technologies in Mixed-hearing Families

CHI EA '25: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems

Keyi Zeng, Jingyang Lin, Ruiqi Chen, RAY LC, Pan Hui, and Xin Tong

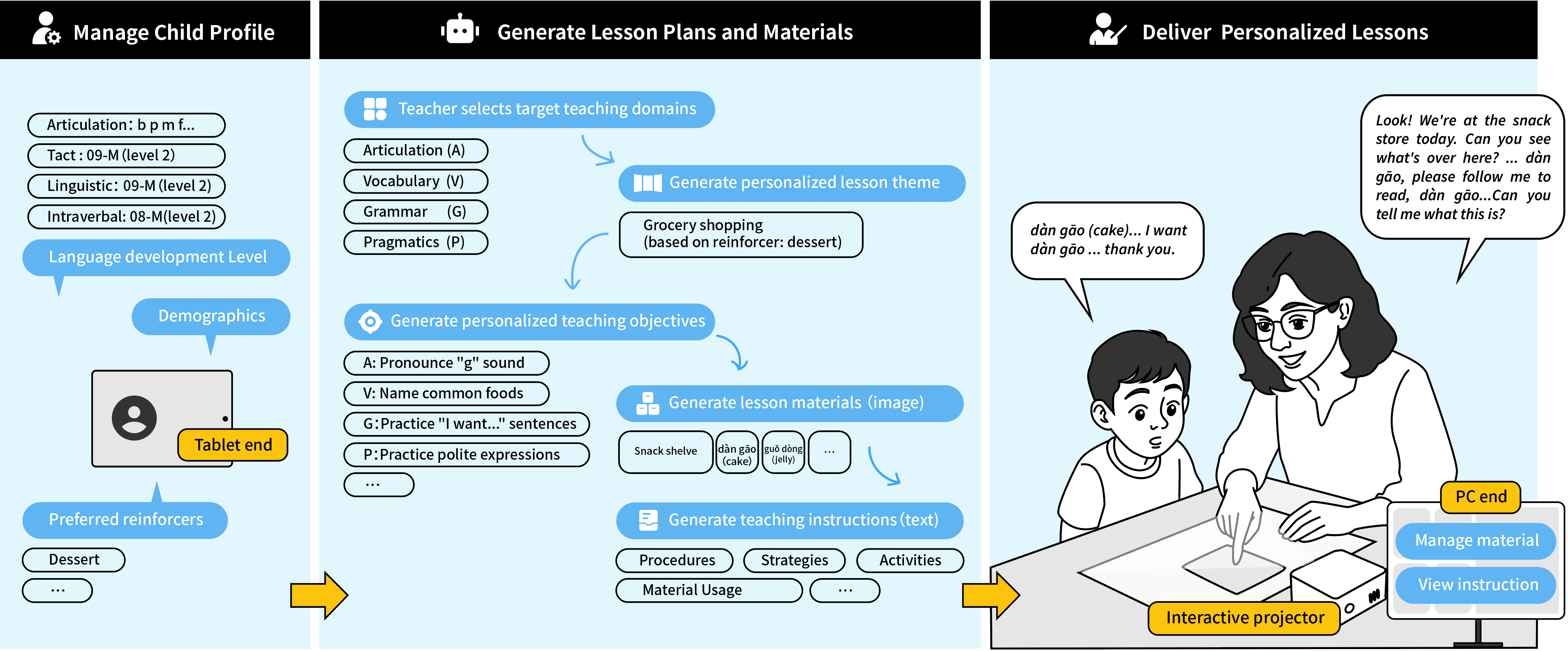

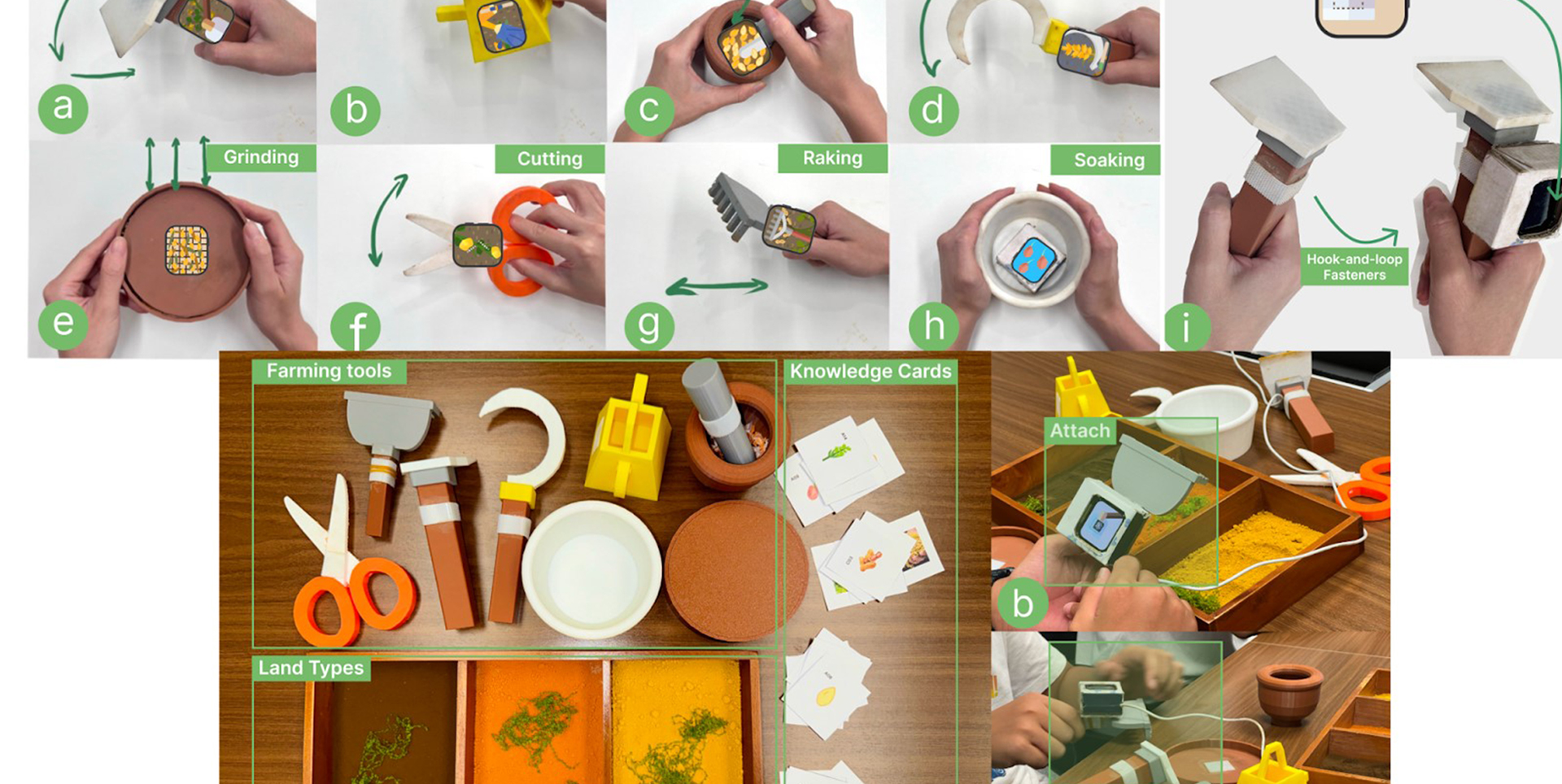

Technology-Mediated Non-pharmacological Interventions for Dementia: Needs for and Challenges in Professional, Personalized and Multi-Stakeholder Collaborative Interventions

CHI '24: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems. 👑Best Paper.

Yuling Sun, Zhennan Yi, Xiaojuan Ma, Junyan Mao, and Xin Tong

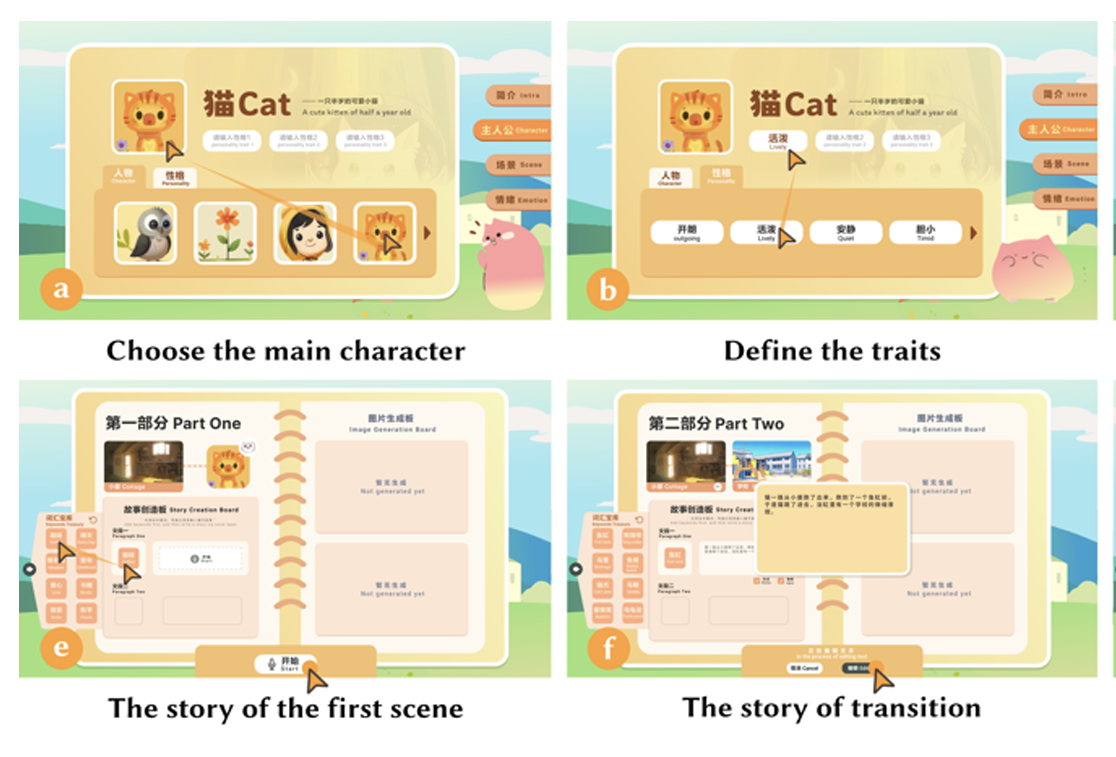

"I Keep Sweet Cats In Real Life, But What I Need In The Virtual World Is A Neurotic Dragon": Virtual Pet Designs With Personality Patterns

The Eleventh International Symposium of Chinese CHI (Chinese CHI 2023), Honorable Mention

Hongni Ye, Ruoxin You, Kaiyuan Lou, Yili Wen, Xin Yi, and Xin Tong

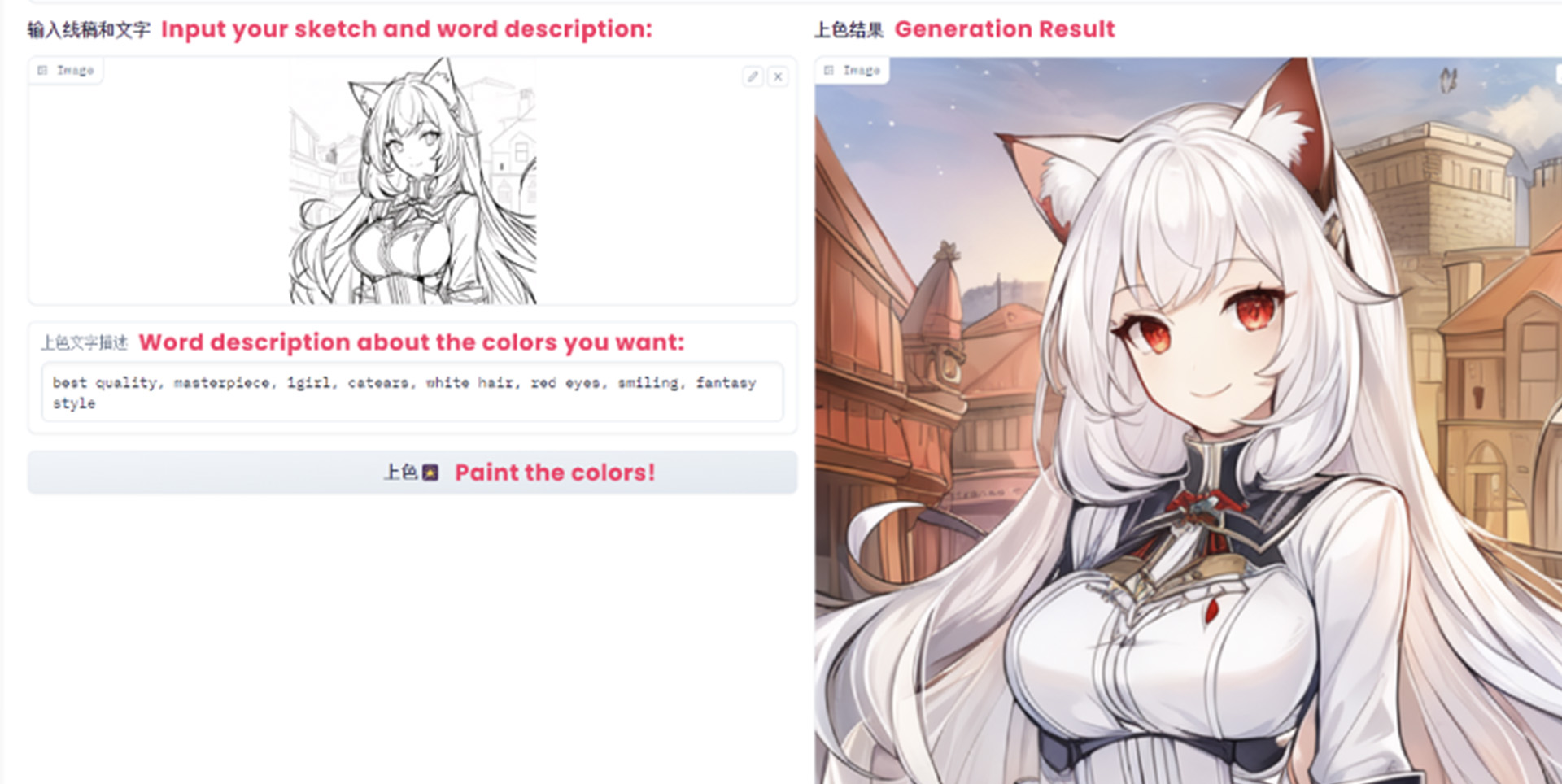

Exploring Designers’ Perceptions and Practices of Collaborating with Generative AI as a Co-creative Agent in a Multi-stakeholder Design Process: Take the Domain of Avatar Design as an Example

CHCHI '23: Proceedings of the Eleventh International Symposium of Chinese CHI

Qingyang He, Weicheng Zheng, Hanxi Bao, Ruiqi Chen, and Xin Tong

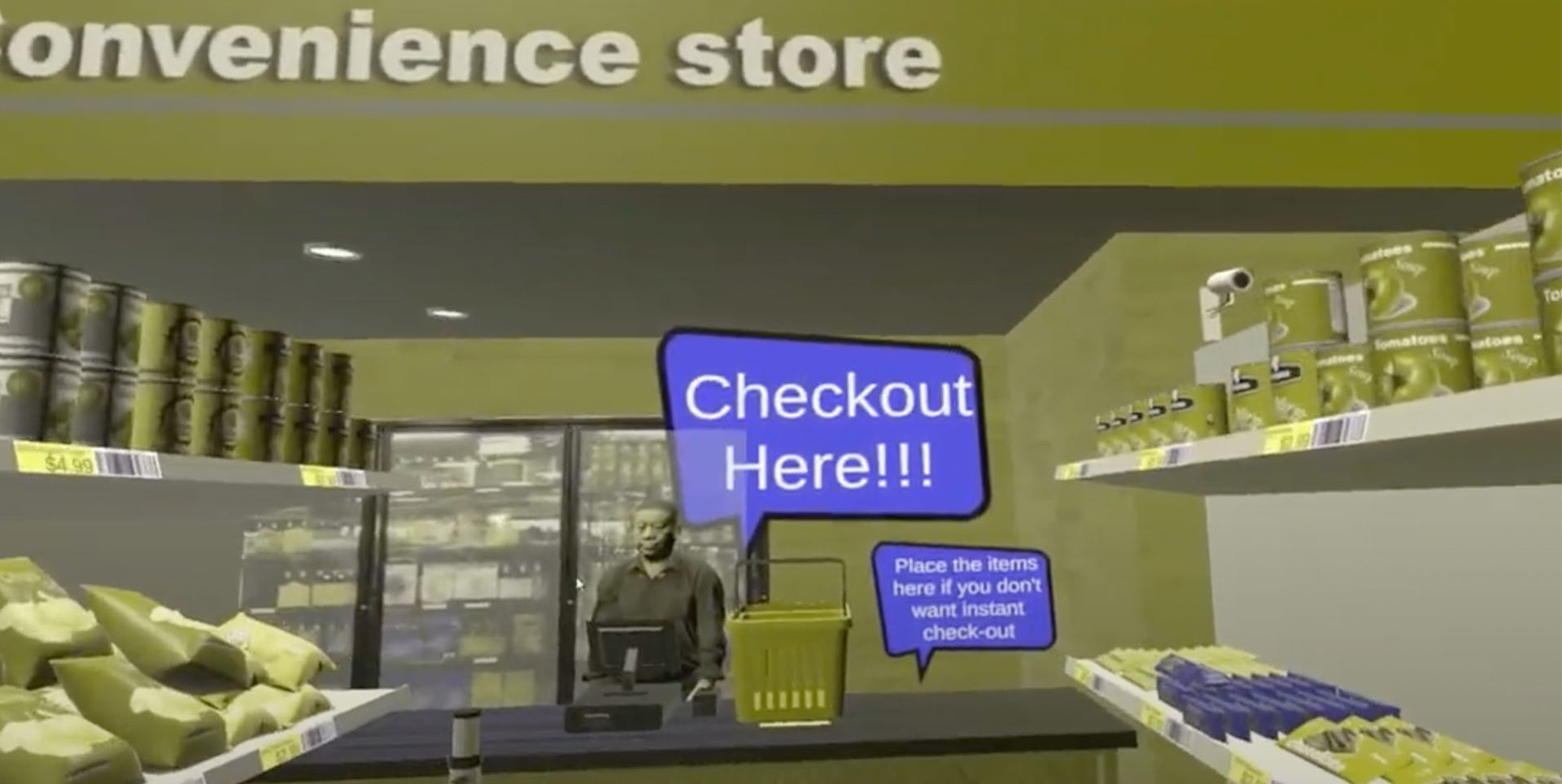

BlueVR: Design and Evaluation of a Virtual Reality Serious Game for Promoting Understanding towards People with Color Vision Deficiency

CHI PLAY '23: Proceedings of the ACM on Human-Computer Interaction, Volume 7, Issue CHI PLAY

Ruoxin You, Yihao Zhou, Weicheng Zheng, Yiran Zuo, Mayra Donaji Barrera Machuca, and Xin Tong

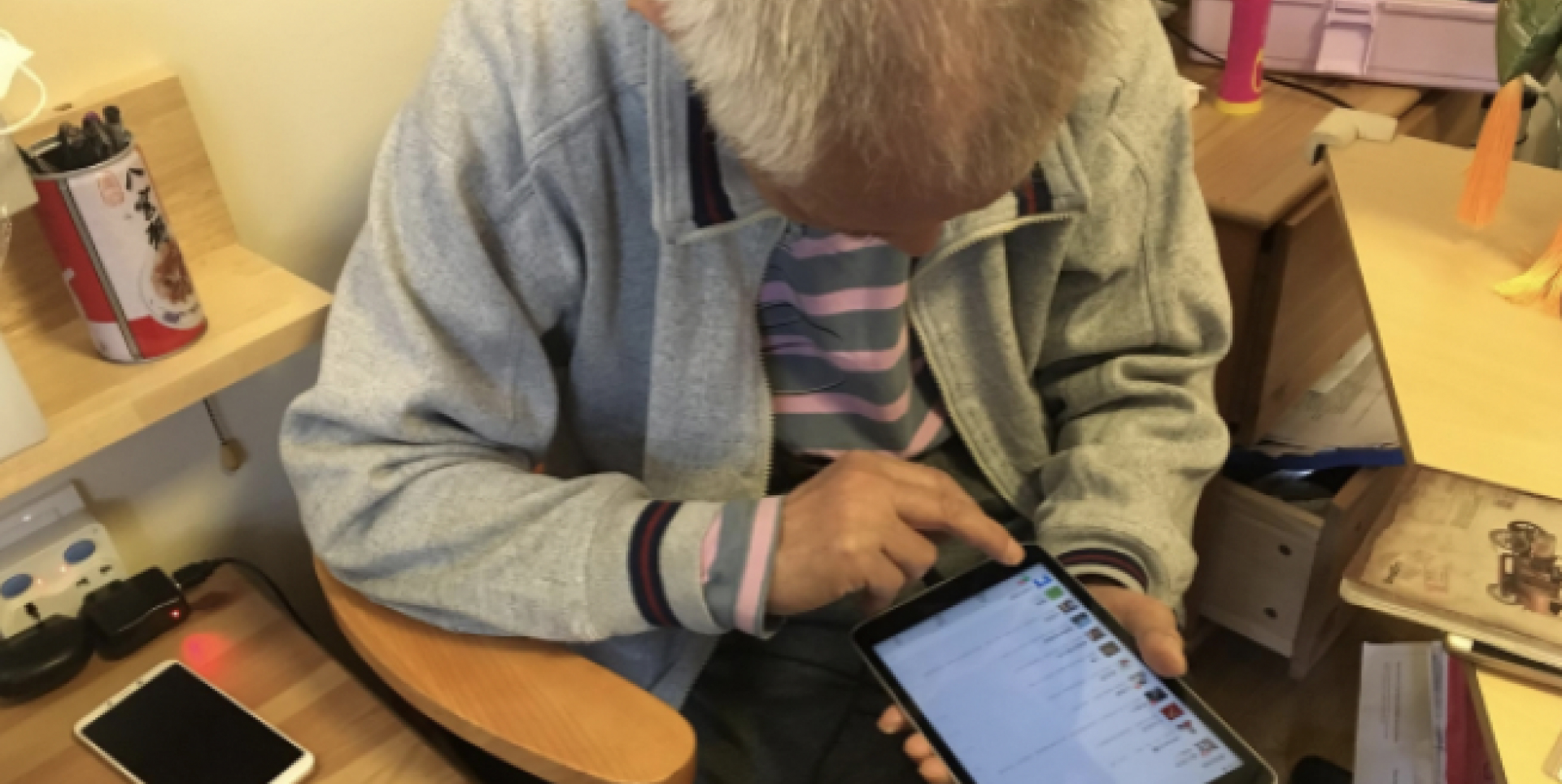

"I Never Imagined Grandma Could Do So Well with Technology": Evolving Roles of Younger Family Members in Older Adults' Technology Learning and Use

2022 Proceedings of the ACM on Human-Computer Interaction, Volume 6, Issue CSCW2

Xinru Tang, Yuling Sun, Bowen Zhang, Zimi Liu, RAY LC, Zhicong Lu, and Xin Tong

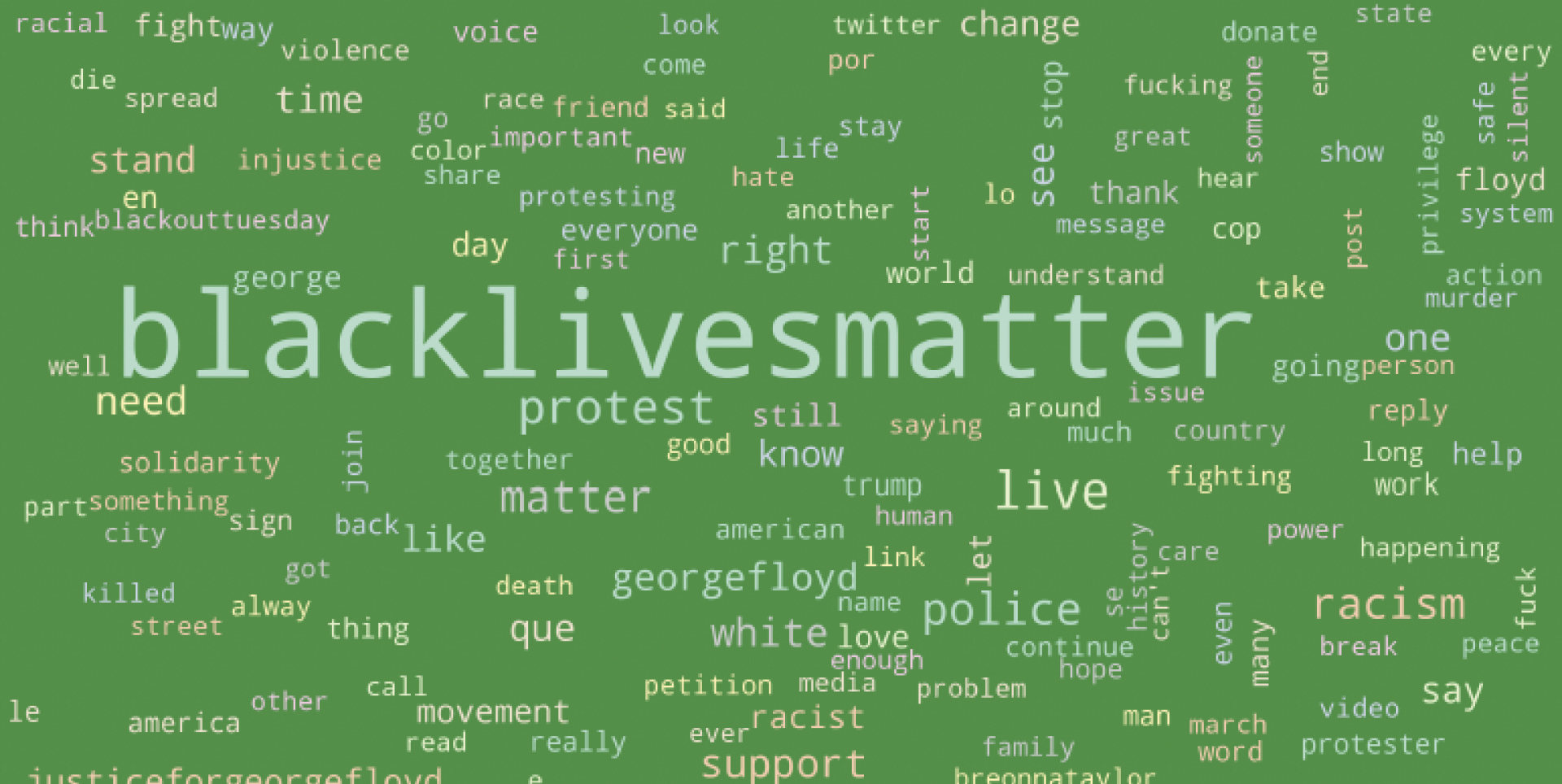

What are People Talking about in #BlackLivesMatter and #StopAsianHate?: Exploring and Categorizing Twitter Topics Emerged in Online Social Movements through the Latent Dirichlet Allocation Model

AIES '22: Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society

Xin Tong, Yixuan Li, Jiayi Li, Rongqi Bei, and Luyao Zhang